Processes with Independent Increments

In a previous post, it was seen that all continuous processes with independent increments are Gaussian. We move on now to look at a much more general class of independent increments processes which need not have continuous sample paths. Such processes can be completely described by their jump intensities, a Brownian term, and a deterministic drift component. However, this class of processes is large enough to capture the kinds of behaviour that occur for more general jump-diffusion processes. An important subclass is that of Lévy processes, which have independent and stationary increments. Lévy processes will be looked at in more detail in the following post, and include, as special cases, the Cauchy process, gamma processes, the variance gamma process, Poisson processes, compound Poisson processes and Brownian motion.

Recall that a process X has the independent increments property if $X_t-X_s$ is independent of $\{X_u\}_{u\le s}$ for all times $0\le s\le t$. More generally, we say that X has the independent increments property with respect to an underlying filtered probability space $(\Omega,\mathcal F,\{\mathcal F_t\}_{t\ge0},\mathbb P)$ if it is adapted and $X_t-X_s$ is independent of $\mathcal F_s$ for all $0\le s\le t$. In particular, every process with independent increments also satisfies the independent increments property with respect to its natural filtration. Throughout this post, I will assume the existence of such a filtered probability space, and the independent increments property will be understood to be with regard to this space.

The process X is said to be continuous in probability if $X_s\to X_t$ in probability as s tends to t. As we now state, a d-dimensional independent increments process X is uniquely specified by a triple $(\Sigma,b,\mu)$ where $\mu$ is a measure describing the jumps of X, $\Sigma$ determines the covariance structure of the Brownian motion component of X, and b is an additional deterministic drift term.

Theorem 1 Let X be an $\mathbb R^d$-valued process with independent increments and continuous in probability. Then, there is a unique continuous function $\mathbb R^d\times\mathbb R_+\to\mathbb C$, $(a,t)\mapsto\psi_t(a)$ such that $\psi_0(a)=0$ and

![\displaystyle {\mathbb E}\left[e^{ia\cdot (X_t-X_0)}\right]=e^{\psi_t(a)}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%7B%5Cmathbb+E%7D%5Cleft%5Be%5E%7Bia%5Ccdot+%28X_t-X_0%29%7D%5Cright%5D%3De%5E%7B%5Cpsi_t%28a%29%7D+&bg=ffffff&fg=000000&s=0&c=20201002) (1)

for all $a\in\mathbb R^d$ and $t\ge0$. Also, $\psi_t(a)$ can be written as

![\displaystyle \psi_t(a)=ia\cdot b_t-\frac{1}{2}a^{\rm T}\Sigma_t a+\int _{{\mathbb R}^d\times[0,t]}\left(e^{ia\cdot x}-1-\frac{ia\cdot x}{1+\Vert x\Vert}\right)\,d\mu(x,s)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5Cpsi_t%28a%29%3Dia%5Ccdot+b_t-%5Cfrac%7B1%7D%7B2%7Da%5E%7B%5Crm+T%7D%5CSigma_t+a%2B%5Cint+_%7B%7B%5Cmathbb+R%7D%5Ed%5Ctimes%5B0%2Ct%5D%7D%5Cleft%28e%5E%7Bia%5Ccdot+x%7D-1-%5Cfrac%7Bia%5Ccdot+x%7D%7B1%2B%5CVert+x%5CVert%7D%5Cright%29%5C%2Cd%5Cmu%28x%2Cs%29+&bg=ffffff&fg=000000&s=0&c=20201002) (2)

where $\Sigma_t$, $b_t$ and $\mu$ are uniquely determined and satisfy the following,

- $t\mapsto\Sigma_t$ is a continuous function from $\mathbb R_+$ to $\mathbb R^{d^2}$ such that $\Sigma_0=0$ and $\Sigma_t-\Sigma_s$ is positive semidefinite for all $t\ge s$.

- $t\mapsto b_t$ is a continuous function from $\mathbb R_+$ to $\mathbb R^d$, with $b_0=0$.

- $\mu$ is a Borel measure on $\mathbb R^d\times\mathbb R_+$ with $\mu(\{0\}\times\mathbb R_+)=0$, $\mu(\mathbb R^d\times\{t\})=0$ for all $t\ge0$ and,

![\displaystyle \int_{{\mathbb R}^d\times[0,t]}\Vert x\Vert^2\wedge 1\,d\mu(x,s)<\infty.](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5Cint_%7B%7B%5Cmathbb+R%7D%5Ed%5Ctimes%5B0%2Ct%5D%7D%5CVert+x%5CVert%5E2%5Cwedge+1%5C%2Cd%5Cmu%28x%2Cs%29%3C%5Cinfty.+&bg=ffffff&fg=000000&s=0&c=20201002) (3)

Furthermore, $(\Sigma,b,\mu)$ uniquely determine all finite distributions of the process $X-X_0$.

Conversely, if $(\Sigma,b,\mu)$ is any triple satisfying the three conditions above, then there exists a process with independent increments satisfying (1,2).

Equation (2) is an extension of the Lévy-Khintchine formula to inhomogeneous processes. In the case where X has stationary increments (i.e., it is a Lévy process) the statement above simplifies. Then, it is possible to write $\Sigma_t=t\Sigma$, $b_t=tb$ and $d\mu(x,t)=d\nu(x)\,dt$ for parameters $\Sigma\in\mathbb R^{d^2}$, $b\in\mathbb R^d$ and measure $\nu$ on $\mathbb R^d$. In that case, (2) reduces to the standard Lévy-Khintchine formula, which I look at in more detail in the following post. It should be mentioned that the term $ia\cdot x/(1+\Vert x\Vert)$ inside the integral in (2) is only there to ensure that the integrand is bounded by a multiple of $\Vert x\Vert^2\wedge1$, so that it is $\mu$-integrable. It could just as easily be replaced by any other bounded term of the form $ia\cdot h(x)$ for a function $h(x)=x+O(\Vert x\Vert^2)$ as $x\to0$. For example, the alternative $ia\cdot x1_{\{\Vert x\Vert\le1\}}$ is often used instead. Changing this term does not alter the form of expression (2), with the differences in the integral simply being absorbed into the drift term b. If $\int_{\mathbb R^d\times[0,t]}\Vert x\Vert\wedge1\,d\mu(x,s)$ is finite, it is often convenient to drop this term completely.
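As a worked illustration of the two remarks above, the following sketch writes out the stationary case and the effect of changing the truncation term. It assumes $\Sigma_t=t\Sigma$, $b_t=tb$ and $d\mu(x,t)=d\nu(x)\,dt$ in the first display. In the second, $h$ stands for any bounded function with $h(x)=x+O(\Vert x\Vert^2)$ near the origin, and $b'$ denotes the adjusted drift. The symbols $h$ and $b'$ are labels introduced here for illustration only.

```latex
% Stationary (Levy) case of (2)
\psi_t(a) = t\left( ia\cdot b - \tfrac12 a^{\rm T}\Sigma a
  + \int_{\mathbb R^d}\Big(e^{ia\cdot x}-1-\frac{ia\cdot x}{1+\Vert x\Vert}\Big)\,d\nu(x)\right)

% Replacing ia.x/(1+|x|) by ia.h(x) in (2) leaves \psi_t(a) unchanged
% provided the drift is replaced by
b'_t = b_t + \int_{\mathbb R^d\times[0,t]}\Big(h(x)-\frac{x}{1+\Vert x\Vert}\Big)\,d\mu(x,s)
```

The integral defining $b'_t$ is finite by (3), since the integrand is bounded and of order $\Vert x\Vert^2$ near the origin.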

The proof of Theorem 1 will occupy most of this post. For more details on the ideas used in this post see, for example, Kallenberg (Foundations of Modern Probability). Note that, by the independence of the increments of X, equation (1) determines the characteristic function of $X_t-X_s$,

$$\mathbb E\left[e^{ia\cdot(X_t-X_s)}\right]=e^{\psi_t(a)-\psi_s(a)}$$

for all $t\ge s$. By independence of the increments again, this uniquely determines all the finite distributions of $X-X_0$.

As it is stated, Theorem 1 shows that the parameters $(\Sigma,b,\mu)$ uniquely determine the finite distributions of X, since they determine its characteristic function. However, the way in which these terms relate to the paths of the process can be explained in more detail. Roughly speaking, $\mu$ describes the intensity of the jumps of X, $\Sigma$ describes the covariance structure, and quadratic variation, of its Brownian motion component, and b is an additional drift component.

Theorem 2 Any

-valued stochastic process X which is continuous in probability with independent increments has a cadlag modification. If it is assumed that X is cadlag, then

as in Theorem 1 are as follows.

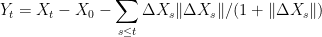

- The process

(4) is integrable, and

. Furthermore,

is a martingale.

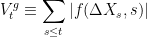

- The process X decomposes uniquely as

where W is a continuous centered Gaussian process with independent increments and

, Y is a semimartingale with independent increments whose quadratic variation has zero continuous part,

. Then, W and Y are independent, Y has parameters

and

![\displaystyle \Sigma^{ij}_t=[W^i,W^j]_t={\rm Cov}(W^i_t,W^j_t).](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5CSigma%5E%7Bij%7D_t%3D%5BW%5Ei%2CW%5Ej%5D_t%3D%7B%5Crm+Cov%7D%28W%5Ei_t%2CW%5Ej_t%29.+&bg=ffffff&fg=000000&s=0&c=20201002)

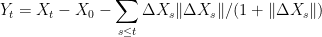

(5) - For any nonnegative measurable

,

![\displaystyle \mu(f)={\mathbb E}\left[\sum_{t>0}1_{\{\Delta X_t\not=0\}}f(\Delta X_t,t)\right].](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5Cmu%28f%29%3D%7B%5Cmathbb+E%7D%5Cleft%5B%5Csum_%7Bt%3E0%7D1_%7B%5C%7B%5CDelta+X_t%5Cnot%3D0%5C%7D%7Df%28%5CDelta+X_t%2Ct%29%5Cright%5D.+&bg=ffffff&fg=000000&s=0&c=20201002)

(6) In particular, for any measurable

the random variable

(7) is almost surely infinite whenever

is infinite, and has the Poisson distribution of rate

otherwise. If

are disjoint subsets of

then

are independent random variables.

Furthermore, letting

be the predictable sigma-algebra and

be

-measurable such that

and

is integrable (resp. locally integrable) then,

![\displaystyle M_t\equiv\sum_{s\le t}f(\Delta X_s,s)-\int_{{\mathbb R}^d\times[0,t]}f(x,s)\,d\mu(x,s)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++M_t%5Cequiv%5Csum_%7Bs%5Cle+t%7Df%28%5CDelta+X_s%2Cs%29-%5Cint_%7B%7B%5Cmathbb+R%7D%5Ed%5Ctimes%5B0%2Ct%5D%7Df%28x%2Cs%29%5C%2Cd%5Cmu%28x%2Cs%29+&bg=ffffff&fg=000000&s=0&c=20201002)

is a martingale (resp. local martingale).

Along with Theorem 1, the above result will be proven bit-by-bit during this post. In the decomposition of X given in the second statement, the process Y has zero Gaussian component or, equivalently, its quadratic covariations are pure jump processes. The term Y is the purely discontinuous part of X and the Gaussian process W is the purely continuous part. More generally, an independent increments process is said to be purely discontinuous if it has parameters $(0,b,\mu)$, so the Brownian motion component is zero. It can be seen from expression (2) for the characteristic function that W has parameters $(\Sigma,0,0)$.

The third statement shows that $\mu$ describes the intensity at which the jumps of X occur and, more specifically, shows that they arrive according to the Poisson distribution with rate given by $\mu$. The random variables $\eta(A)$ given by equation (7) define a Poisson point process (aka, Poisson random measure). Poisson point processes can be used to construct processes with independent increments. Although I do not explicitly take that approach here, more details can be found in Kallenberg. The final statement shows that the measure $\mu$ defines the compensator of the sum $\sum_{s\le t}f(\Delta X_s,s)$. That is, we can construct a continuous FV process A such that $\sum_{s\le t}f(\Delta X_s,s)-A_t$ is a local martingale.
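For a concrete instance of this compensator, consider the simplest case of a homogeneous Poisson process. This example is an illustration added here, with $N$ and $\lambda$ as assumed notation: all jumps have size 1, the jump measure is $\delta_1\otimes\lambda\,ds$, and taking $f(x,s)=x$ gives the familiar compensated Poisson process.

```latex
% Poisson process N of rate \lambda: d\mu(x,s) = d\delta_1(x)\,\lambda\,ds, f(x,s) = x
\sum_{s\le t} f(\Delta N_s,s) = N_t,
\qquad
A_t = \int_{\mathbb R\times[0,t]} f(x,s)\,d\mu(x,s) = \lambda t,
\qquad
M_t = N_t - \lambda t .
```

Here $A_t=\lambda t$ is continuous and of finite variation, and $M$ is a martingale.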

Let us now move on to proving the main results of this post. Throughout, we assume that the underlying filtered probability space is complete. We start by showing that cadlag modifications exist. As there is no requirement here for the process X to have stationary increments, it will not in general be a homogeneous Markov process. However, the space-time process $(X_t,t)$ will be homogeneous Markov and, in fact, is Feller.

Lemma 3 Let X be an $\mathbb R^d$-valued process with independent increments and continuous in probability. For each $t\ge0$, define the transition probability $P_t$ on $\mathbb R^d\times\mathbb R_+$ by

![\displaystyle P_tf(x,s)={\mathbb E}[f(X_{s+t}-X_s+x,s+t)]](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++P_tf%28x%2Cs%29%3D%7B%5Cmathbb+E%7D%5Bf%28X_%7Bs%2Bt%7D-X_s%2Bx%2Cs%2Bt%29%5D+&bg=ffffff&fg=000000&s=0&c=20201002) (8)

for nonnegative measurable $f\colon\mathbb R^d\times\mathbb R_+\to\mathbb R$.

Then, $(X_t,t)$ is a Markov process with Feller transition function $\{P_t\}_{t\ge0}$. In particular, X has a cadlag modification.

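Proof: The existence of cadlag modifications is a standard property of Feller processes. So, it just needs to be shown that the specified transition probabilities do indeed define a Feller transition function with respect to which $(X_t,t)$ is a Markov process.

By the independent increments property, (8) can be rewritten as

$$P_tf(x,s)=\mathbb E\left[f(X_{s+t}-X_s+x,s+t)\mid\mathcal F_s\right]$$ (9)

which remains true if x is replaced by an $\mathcal F_s$-measurable random variable. To show that this defines a transition function, the Chapman-Kolmogorov equation $P_tP_u=P_{t+u}$ needs to be verified,

$$\begin{aligned}P_tP_uf(x,s)&=\mathbb E\left[P_uf(X_{s+t}-X_s+x,s+t)\right]\\&=\mathbb E\left[\mathbb E\left[f(X_{s+t+u}-X_s+x,s+t+u)\mid\mathcal F_{s+t}\right]\right]\\&=P_{t+u}f(x,s).\end{aligned}$$

Here, the second equality is simply using (9) with s+t in place of s, u in place of t, and $X_{s+t}-X_s+x$ in place of x. Substituting $X_s$ in place of x in (9) gives

$$\mathbb E\left[f(X_{s+t},s+t)\mid\mathcal F_s\right]=P_tf(X_s,s)$$

so $(X_t,t)$ is Markov with transition function $P_t$.

Only the Feller property remains. For this it needs to be shown that, for any $f\in C_0(\mathbb R^d\times\mathbb R_+)$ then $P_tf\in C_0(\mathbb R^d\times\mathbb R_+)$ and $P_tf\to f$ in the pointwise topology as t tends to zero. Recall that $f\in C_0(\mathbb R^d\times\mathbb R_+)$ means that f is continuous and $f(x,s)\to0$ as $\Vert(x,s)\Vert\to\infty$. Choosing sequences $x_n\to x$ and $s_n\to s$, continuity in probability of X and bounded convergence gives

$$P_tf(x_n,s_n)=\mathbb E\left[f(X_{s_n+t}-X_{s_n}+x_n,s_n+t)\right]\to\mathbb E\left[f(X_{s+t}-X_s+x,s+t)\right]=P_tf(x,s).$$

So $P_tf$ is continuous. Similarly, if $\Vert(x_n,s_n)\Vert\to\infty$ then $(X_{s_n+t}-X_{s_n}+x_n,s_n+t)$ tends to infinity in probability and, again by bounded convergence,

$$P_tf(x_n,s_n)=\mathbb E\left[f(X_{s_n+t}-X_{s_n}+x_n,s_n+t)\right]\to0.$$

So $P_tf\in C_0(\mathbb R^d\times\mathbb R_+)$ as required.

Finally, choose $t_n\to0$. Bounded convergence again gives

$$P_{t_n}f(x,s)=\mathbb E\left[f(X_{s+t_n}-X_s+x,s+t_n)\right]\to f(x,s)$$

so that $P_t$ is Feller as required. ⬜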

So, from now on, it can be assumed that X is cadlag. We can show directly that, as stated in Theorem 2, the jumps of X do indeed occur according to the Poisson distribution with rate determined by $\mu$.

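Lemma 4 Let X be a cadlag $\mathbb R^d$-valued process with independent increments and continuous in probability. Define the measure $\mu$ on $\mathbb R^d\times\mathbb R_+$ by (6) and, for each measurable $A\subseteq\mathbb R^d\times\mathbb R_+$ define the random variable $\eta(A)$ by (7).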

Proof: It follows directly from the definitions that $\mu(A)=\mathbb E[\eta(A)]$, so that $\eta(A)$ is almost surely finite whenever $\mu(A)<\infty$. Conversely, suppose that $\eta(A)$ is almost surely finite. Then we can define the process $N_t\equiv\eta(A\cap(\mathbb R^d\times[0,t]))$. So, $N_t$ is just the number of times $s\le t$ at which $(\Delta X_s,s)$ is in A. This is a counting process which is continuous in probability and, as $N_t-N_s$ depends only on the increments of X in the interval [s,t], it satisfies the independent increments property. Hence, $N$ is a Poisson process with cumulative rate $\mathbb E[N_t]=\mu(A\cap(\mathbb R^d\times[0,t]))$. Letting t increase to infinity, we see that $\eta(A)$ is Poisson with rate $\mu(A)$, which must therefore be finite.

Now, for any $\epsilon,t>0$ the fact that X is cadlag means that, with probability one, $\Vert\Delta X_s\Vert$ can only be greater than $\epsilon$ finitely often before time t. So, letting A be the set of $(x,s)\in\mathbb R^d\times\mathbb R_+$ with $\Vert x\Vert>\epsilon$ and $s\le t$, $\eta(A)$ is almost surely finite. As shown above, this implies that $\mu(A)<\infty$.

Next, suppose that $A$ is such that $\mu(A)=\infty$. Then there are subsets $A_n\subseteq A$ increasing to A with $\mu(A_n)$ finite. So, $\eta(A_n)\le\eta(A)$ are Poisson of rate $\mu(A_n)$, which increases to infinity as n goes to infinity, implying that $\eta(A)$ is almost surely infinite.

Finally, suppose that $A_1,A_2,\ldots,A_n$ are pairwise disjoint. By taking limits of sets with finite $\mu$-measure, if required, we can restrict to the case where $\mu(A_k)<\infty$. Then, the Poisson processes $N^k_t\equiv\eta(A_k\cap(\mathbb R^d\times[0,t]))$ never jump simultaneously and are therefore independent. So, $\eta(A_1),\ldots,\eta(A_n)$ are independent random variables. ⬜
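The Poisson behaviour of the jump counts can be checked numerically in a toy case. The following is a minimal sketch, not part of the original argument: it assumes a one-dimensional compound Poisson process with jump intensity 4 per unit time and jump sizes uniform on [-1, 1], and the regions `in_a`, `in_b` and all function names are hypothetical. For disjoint regions A and B it estimates the mean and variance of the count in A (both should be close to the measure of A) and the covariance of the two counts (which should be close to zero).

```python
import random


def simulate_jumps(intensity, horizon, rng):
    """Return (size, time) pairs: exponential gaps between jump times, sizes uniform on [-1, 1]."""
    jumps = []
    time = rng.expovariate(intensity)
    while time <= horizon:
        jumps.append((rng.uniform(-1.0, 1.0), time))
        time += rng.expovariate(intensity)
    return jumps


def count_in(jumps, region):
    """Count the jumps (x, s) lying in the given region, playing the role of eta(A)."""
    return sum(1 for x, s in jumps if region(x, s))


def jump_count_statistics(n_paths=20000, intensity=4.0, horizon=2.0, seed=1):
    """Monte Carlo estimates of mean/variance of eta(A) and covariance of eta(A), eta(B)."""
    rng = random.Random(seed)
    in_a = lambda x, s: x > 0.5 and s <= 1.0   # assumed region A, measure 4 * 1 * 0.25 = 1
    in_b = lambda x, s: x < 0.0 and s > 1.0    # assumed region B, measure 4 * 1 * 0.50 = 2
    counts_a, counts_b = [], []
    for _ in range(n_paths):
        jumps = simulate_jumps(intensity, horizon, rng)
        counts_a.append(count_in(jumps, in_a))
        counts_b.append(count_in(jumps, in_b))
    mean_a = sum(counts_a) / n_paths
    mean_b = sum(counts_b) / n_paths
    var_a = sum((c - mean_a) ** 2 for c in counts_a) / n_paths
    cov_ab = sum((ca - mean_a) * (cb - mean_b)
                 for ca, cb in zip(counts_a, counts_b)) / n_paths
    return {"mean_a": mean_a, "var_a": var_a, "mean_b": mean_b, "cov_ab": cov_ab}


if __name__ == "__main__":
    print(jump_count_statistics())
```

Equal mean and variance is consistent with a Poisson law, and vanishing covariance is consistent with independence, although neither property proves it. The simulation is Poisson by construction, so this is a sanity check of the statement rather than evidence for it.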

We now move on to proving equations (1,2). For this, we recall the following result from Lemma 3 of the post on continuous processes with independent increments.

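Lemma 5 Let X be an $\mathbb R^d$-valued process with independent increments and continuous in probability. Then, there is a unique continuous function $\mathbb R^d\times\mathbb R_+\to\mathbb C$, $(a,t)\mapsto\psi_t(a)$ with $\psi_0(a)=0$ and satisfying equation (1).

Furthermore, $U_t\equiv\exp\left(ia\cdot(X_t-X_0)-\psi_t(a)\right)$ is a martingale for each fixed $a\in\mathbb R^d$.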

In the case of continuous processes, Ito's formula was applied to the logarithm of the martingale to determine

up to a square integrable martingale term (see Lemma 4 of the earlier post). We can do exactly the same thing here using the generalized Ito formula, which applies to all semimartingales.

Lemma 6 Let X be a cadlag $\mathbb R^d$-valued process with independent increments and continuous in probability. Then, there is a continuous function $\tilde b\colon\mathbb R_+\to\mathbb R^d$ such that $\tilde X\equiv X-\tilde b$ is a semimartingale. Letting $\psi_t(a)$ be as in Lemma 5, the process

![\displaystyle M_t\equiv ia\cdot(X_t-X_0)-\psi_t(a)-\frac12[a\cdot\tilde X]^c_t+\sum_{s\le t}\left(e^{ia\cdot\Delta X_s}-1-ia\cdot\Delta X_s\right)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++M_t%5Cequiv+ia%5Ccdot%28X_t-X_0%29-%5Cpsi_t%28a%29-%5Cfrac12%5Ba%5Ccdot%5Ctilde+X%5D%5Ec_t%2B%5Csum_%7Bs%5Cle+t%7D%5Cleft%28e%5E%7Bia%5Ccdot%5CDelta+X_s%7D-1-ia%5Ccdot%5CDelta+X_s%5Cright%29+&bg=ffffff&fg=000000&s=0&c=20201002)

is a square integrable martingale.

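Proof: We argue in a similar way as in the proof of Lemma 4 from the post on continuous processes with independent increments. In fact, the proof is almost word-for-word the same as that given in the previous post, the main difference here being the use of the generalized Ito formula.

Fix $a\in\mathbb R^d$ and set $U_t\equiv\exp\left(ia\cdot(X_t-X_0)-\psi_t(a)\right)$. By the previous lemma, U is a martingale and, hence, is a semimartingale. So, Ito's lemma implies that $Y\equiv\log U$, given by $Y_t=ia\cdot(X_t-X_0)-\psi_t(a)$, is also a semimartingale. Note that, although the logarithm is not a well-defined twice differentiable function on $\mathbb C\setminus\{0\}$, this is true locally (in fact, on every half-plane). So, there is no problem with applying Ito's lemma here.

Taking imaginary parts of Y shows that $a\cdot(X_t-X_0)-\Im\psi_t(a)$ is a semimartingale. So, defining the continuous functions $\tilde b^k\colon\mathbb R_+\to\mathbb R$ by $\tilde b^k_t=\Im\psi_t(e_k)$ where $e_k$ is the unit vector in the k'th dimension, $\tilde X\equiv X-\tilde b$ is a semimartingale.

Applying the generalized Ito formula to $U=e^Y$ gives,

$$U_t=1+\int_0^tU_{s-}\,dY_s+\frac12\int_0^tU_{s-}\,d[Y]^c_s+\sum_{s\le t}U_{s-}\left(e^{\Delta Y_s}-1-\Delta Y_s\right).$$

As $|U_t|=e^{-\Re\psi_t(a)}$, U is uniformly bounded over any finite time interval and, in particular, is a square integrable martingale. Similarly, $U^{-1}$ is bounded over finite time intervals, so

$$\int_0^tU_{s-}^{-1}\,dU_s=Y_t+\frac12[Y]^c_t+\sum_{s\le t}\left(e^{\Delta Y_s}-1-\Delta Y_s\right)$$ (10)

is a square integrable martingale.

Now, Y can be written as $Y=ia\cdot(\tilde X-X_0)+V$ for the process $V_t=ia\cdot\tilde b_t-\psi_t(a)$ which is both a semimartingale and a deterministic process. Hence, V is of finite variation over all finite time intervals. As FV processes do not contribute to continuous parts of quadratic variations,

$$[Y]^c=[ia\cdot\tilde X]^c=-[a\cdot\tilde X]^c.$$

Substituting this, the identity $\Delta Y=ia\cdot\Delta X$ (which holds because $\psi$ is continuous), and the definition of Y back into (10) gives the required expression for M. ⬜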

Taking expectations of the martingale M defined in Lemma 6 gives us an expression for $\psi_t(a)$ which, with a bit of work, gives the proof of Theorem 1 in one direction. Lemma 7 below completes much of the proofs required in this post. It will then only remain to show that the coefficients $(\Sigma,b,\mu)$ are uniquely determined by equation (2), that we can construct an independent increments process corresponding to such parameters, and to prove the decomposition given in the second statement of Theorem 2 together with the final statement of Theorem 2.

Lemma 7 Let X be a cadlag $\mathbb R^d$-valued process with independent increments and continuous in probability. Define the following.

- The process $Y_t\equiv X_t-X_0-\sum_{s\le t}\Delta X_s\Vert\Delta X_s\Vert/(1+\Vert\Delta X_s\Vert)$ given by (4) is uniformly integrable over finite time intervals, so we can define the continuous function $b\colon\mathbb R_+\to\mathbb R^d$ by $b_t=\mathbb E[Y_t]$ and, then, $Y-b$ is a martingale.

- With b as above, $\tilde X\equiv X-b$ is a semimartingale, so we can set

![\displaystyle \Sigma^{ij}_t={\mathbb E}\left[[\tilde X^i,\tilde X^j]^c_t\right].](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5CSigma%5E%7Bij%7D_t%3D%7B%5Cmathbb+E%7D%5Cleft%5B%5B%5Ctilde+X%5Ei%2C%5Ctilde+X%5Ej%5D%5Ec_t%5Cright%5D.+&bg=ffffff&fg=000000&s=0&c=20201002) (11)

- Define the measure $\mu$ on $\mathbb R^d\times\mathbb R_+$ by (6).

Then, $(\Sigma,b,\mu)$ satisfy the properties stated in Theorem 1 and identity (2) holds.

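Proof: Let M be the square integrable martingale defined in Lemma 6. Applying the Ito isometry to the real and imaginary components of M respectively,

$$\mathbb E\left[[\Re M]_t+[\Im M]_t\right]=\mathbb E\left[(\Re M_t)^2+(\Im M_t)^2\right]=\mathbb E\left[|M_t|^2\right]$$

which is finite. However, M has jump $\Delta M_s=e^{ia\cdot\Delta X_s}-1$, so that $[\Re M]_t+[\Im M]_t\ge\sum_{s\le t}|e^{ia\cdot\Delta X_s}-1|^2$. It follows that

$$\mathbb E\left[\sum_{s\le t}\left|e^{ia\cdot\Delta X_s}-1\right|^2\right]<\infty.$$

Since $|e^{ia\cdot x}-1|^2/(a\cdot x)^2\to1$ as $a\cdot x\to0$, applying this with a equal to each of the unit vectors $e_k$ and using (6), we have shown that

$$\int_{\{\Vert x\Vert\le1\}\times[0,t]}\Vert x\Vert^2\,d\mu(x,s)<\infty.$$

Also, Lemma 4 states that $\mu$ has finite measure on the set of $(x,s)$ with $\Vert x\Vert>1$ and $s\le t$, giving inequality (3). As $\mu(\{0\}\times\mathbb R_+)=0$ by definition and $\mu(\mathbb R^d\times\{t\})=\mathbb P(\Delta X_t\not=0)=0$ by continuity in probability of X, $\mu$ satisfies the required properties.

Defining the process Y by (4), we can rearrange the expression for M,

$$M_t=ia\cdot Y_t-\frac12[a\cdot\tilde X]^c_t-\psi_t(a)+\sum_{s\le t}\left(e^{ia\cdot\Delta X_s}-1-\frac{ia\cdot\Delta X_s}{1+\Vert\Delta X_s\Vert}\right).$$ (12)

The terms inside the summation are bounded and of order $\Vert\Delta X_s\Vert^2$, so are bounded by a multiple of $\Vert\Delta X_s\Vert^2\wedge1$. So, inequality (3) says that the summation is bounded by an integrable random variable over finite time intervals, so the first two terms of the above expression for M are also bounded by an integrable random variable. Taking the real and imaginary parts shows that Y and $[a\cdot\tilde X]^c$ are also bounded by an integrable random variable over any finite time interval. So, we can define

$$b_t=\mathbb E[Y_t],\qquad\Sigma^{ij}_t=\mathbb E\left[[\tilde X^i,\tilde X^j]^c_t\right]$$

which, by dominated convergence, are continuous. As $[a\cdot\tilde X]^c$ is increasing, $\Sigma_t-\Sigma_s$ is positive semidefinite for all $t\ge s$. So, we have shown that $(\Sigma,b,\mu)$ satisfy the required properties.

Taking expectations of (12),

$$0=ia\cdot b_t-\frac12a^{\rm T}\Sigma_ta-\psi_t(a)+\int_{\mathbb R^d\times[0,t]}\left(e^{ia\cdot x}-1-\frac{ia\cdot x}{1+\Vert x\Vert}\right)d\mu(x,s)$$

giving equation (2) for $\psi_t(a)$.

Finally, note that $Y-b$ is an integrable process with zero mean and independent increments, so is a martingale. Then $X-b$ is the sum of the martingale $Y-b$ and an FV process, so is a semimartingale. Recall also, from Lemma 6, that $\tilde X$ was defined to be $X-\tilde b$ for any continuous deterministic process $\tilde b$ making $X-\tilde b$ into a semimartingale, so we may as well take $\tilde b=b$. ⬜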

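This almost completes the proof of Theorem 1. Other than constructing the process X from $(\Sigma,b,\mu)$, which will be done below, it only remains to show that $(\Sigma,b,\mu)$ are uniquely determined by the identity (2). This will follow from the fact that any different set of parameters $(\Sigma',b',\mu')$ gives rise to a different process, using the construction described below. However, (2) can also be inverted by applying a Fourier transform. If $f\colon\mathbb R^d\to\mathbb R$ is a Schwartz function, then integrating (2) against its Fourier transform $\hat f$, normalized so that $f(x)=\int e^{ia\cdot x}\hat f(a)\,da$, gives,

$$\int\psi_t(a)\hat f(a)\,da=b^j_tf_j(0)+\frac12\Sigma^{jk}_tf_{jk}(0)+\int_{\mathbb R^d\times[0,t]}\left(f(x)-f(0)-\frac{x^jf_j(0)}{1+\Vert x\Vert}\right)d\mu(x,s).$$ (13)

Here, $f_j$ and $f_{jk}$ represent the partial derivatives of f, and summation over the indices j, k is understood. If f is zero in a neighbourhood of the origin, then (13) reduces to $\int_{\mathbb R^d\times[0,t]}f(x)\,d\mu(x,s)$, so it uniquely determines $\mu$. Then, $b$ and $\Sigma$ can be read off from (13).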

We now prove the final statement of Theorem 2, which constructs the compensator of a sum over the jumps of an independent increments process in terms of the measure $\mu$.

Lemma 8 Let X be a d-dimensional cadlag independent increments process with $(\Sigma,b,\mu)$ as in Theorem 1 and let $f\colon\mathbb R^d\times\mathbb R_+\times\Omega\to\mathbb R$ be $\mathcal B(\mathbb R^d)\otimes\mathcal P$-measurable such that $f(0,t)=0$ and $\int_{\mathbb R^d\times[0,t]}|f(x,s)|\,d\mu(x,s)$ is integrable (resp. locally integrable). Then, $\sum_{s\le t}|f(\Delta X_s,s)|$ is integrable (resp. locally integrable) and

![\displaystyle M^f_t\equiv\sum_{s\le t}f(\Delta X_s,s)-\int_{{\mathbb R}^d\times[0,t]}f(x,s)\,d\mu(x,s)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++M%5Ef_t%5Cequiv%5Csum_%7Bs%5Cle+t%7Df%28%5CDelta+X_s%2Cs%29-%5Cint_%7B%7B%5Cmathbb+R%7D%5Ed%5Ctimes%5B0%2Ct%5D%7Df%28x%2Cs%29%5C%2Cd%5Cmu%28x%2Cs%29+&bg=ffffff&fg=000000&s=0&c=20201002)

is a martingale (resp. local martingale).

Proof: Choose an . If

for some constant K and

for

then (6) gives

which, by (3), is finite.

First, consider f of the form for some bounded measurable

with

for

. Then, by (6),

has zero mean. Also, is a function of

and, therefore, is independent of

. So,

is a martingale.

Next, consider where

is a bounded predictable process and g is as above. Setting

gives

As stochastic integration preserves the local martingale property this is a local martingale and, as it has integrable variation over finite time intervals, it will be a proper martingale. The idea is to apply the functional monotone class theorem to extend this to all bounded f with for

. Linearity is clear. That is, if

are martingales then

are martingales and, for a constant

,

is a martingale.

Now consider a sequence of nonnegative

-measurable functions increasing to a limit f such that

are martingales,

, and that

is integrable. By monotone convergence,

This shows that is integrable and

converges to

in

. So,

is a martingale. By the functional monotone class theorem, this implies that

is a martingale for all bounded

-measurable functions f with

for

.

Now suppose that f is -measurable with

and that

is integrable. Writing f as the difference of its positive and negative parts, we can reduce the problem to nonnegative f. However, in that case, the functions

increase to f. As shown above,

are integrable,

are martingales, and

and

in

. So,

is integrable and

is a martingale.

Finally, suppose that and

is locally integrable. Choose stopping times

increasing to infinity such that the stopped process

is integrable. Then, setting

,

is integrable. So, by the above,

is integrable and

is a martingale. ⬜

Constructing independent increments processes

Having proven that equation (2) holds for an independent increments process X, it remains to construct the process given parameters $(\Sigma,b,\mu)$. In the case where $\mu=0$ then (2) shows that X will be Gaussian, and can be constructed as described in the post on continuous independent increments processes. We, therefore, concentrate on the purely discontinuous case. Then, from Lemma 4 we know precisely how the jumps of X are distributed. So, to reconstruct X, the method is clear enough. We just generate its jumps $\Delta X_t$ according to the Poisson distributions described above, then sum these up to obtain X. However, there is one problem. The process X can have infinitely many jumps over any finite time interval and, in fact, the sum of their absolute values can be infinite (as is the case for the Cauchy process, for example). So, the result of the sum $\sum_{s\le t}\Delta X_s$ will depend on the order of summation. We avoid these issues by starting with the case where the jump measure $\mu$ is finite, so the process has only finitely many jumps.

Lemma 9 Let $\mu$ be a finite measure on $\mathbb R^d\times\mathbb R_+$ such that $\mu(\{0\}\times\mathbb R_+)=0$ and $\mu(\mathbb R^d\times\{t\})=0$ for every $t\ge0$.

Let $(Z_1,T_1),(Z_2,T_2),\ldots$ be a sequence of independent $\mathbb R^d\times\mathbb R_+$-valued random variables with distribution $\mu/\mu(\mathbb R^d\times\mathbb R_+)$, and N be an independent Poisson distributed random variable of rate $\mu(\mathbb R^d\times\mathbb R_+)$. Setting,

![\displaystyle \setlength\arraycolsep{2pt} \begin{array}{rl} &\displaystyle X_t\equiv\sum_{n=1}^N1_{\{T_n\le t\}}Z_n-b_t,\smallskip\\ &\displaystyle b_t\equiv\int_{{\mathbb R}^d\times[0,t]}\frac{x}{1+\Vert x\Vert}\,d\mu(x,s), \end{array}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5Csetlength%5Carraycolsep%7B2pt%7D+%5Cbegin%7Barray%7D%7Brl%7D+%26%5Cdisplaystyle+X_t%5Cequiv%5Csum_%7Bn%3D1%7D%5EN1_%7B%5C%7BT_n%5Cle+t%5C%7D%7DZ_n-b_t%2C%5Csmallskip%5C%5C+%26%5Cdisplaystyle+b_t%5Cequiv%5Cint_%7B%7B%5Cmathbb+R%7D%5Ed%5Ctimes%5B0%2Ct%5D%7D%5Cfrac%7Bx%7D%7B1%2B%5CVert+x%5CVert%7D%5C%2Cd%5Cmu%28x%2Cs%29%2C+%5Cend%7Barray%7D+&bg=ffffff&fg=000000&s=0&c=20201002)

then X is an independent increments process with parameters $(0,0,\mu)$.

Proof: We can prove this by a direct computation of the characteristic function of the increments of X and then comparing with (2).

Fix any times and let

and

. Independence of the variables

conditional on N gives

for any . Then, noting that, conditional on

,

is the sum of

independent random variables with distribution given by

,

where . Writing the right hand side of this as

for brevity, we can use the fact that, conditional on N,

has the multinomial distribution to get

By the Poisson distribution, the probability that N equals any nonnegative integer n is giving,

So, the characteristic function of is the product of the characteristic functions of the increments

, showing that Y is an independent increments process. The characteristic function of

is then,

Comparing with (2) we see that this is of the correct form. ⬜
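The construction in Lemma 9 is easy to try out numerically. The following is a minimal sketch under stated assumptions, not the author's code: it takes d = 1 and a toy finite measure of the form (intensity) x (discrete jump law) x (Lebesgue measure on [0, horizon]), and every function name and parameter is hypothetical. It samples N and the pairs $(T_n,Z_n)$, evaluates $X_t$, and provides the exponent from (2) with $\Sigma=0$ and $b=0$ so that a Monte Carlo estimate of the characteristic function can be compared with it.

```python
import cmath
import random


def sample_poisson(rate, rng):
    """Sample a Poisson(rate) variate by counting unit-rate exponential arrivals in [0, rate]."""
    count, elapsed = 0, rng.expovariate(1.0)
    while elapsed <= rate:
        count += 1
        elapsed += rng.expovariate(1.0)
    return count


def drift_correction(t, intensity, horizon, jump_law):
    """b_t: integral of x / (1 + |x|) against the toy measure restricted to times <= t."""
    t = min(max(t, 0.0), horizon)
    return intensity * t * sum(p * x / (1.0 + abs(x)) for x, p in jump_law)


def sample_jumps(intensity, horizon, jump_law, rng):
    """Draw N ~ Poisson(total mass), then N i.i.d. pairs (T_n, Z_n) from the normalized measure."""
    n_jumps = sample_poisson(intensity * horizon, rng)
    sizes = [x for x, _ in jump_law]
    weights = [p for _, p in jump_law]
    return [(rng.uniform(0.0, horizon), rng.choices(sizes, weights)[0])
            for _ in range(n_jumps)]


def path_value(t, jumps, intensity, horizon, jump_law):
    """X_t: the sum of the jumps Z_n with T_n <= t, minus b_t."""
    total = sum(z for (time, z) in jumps if time <= t)
    return total - drift_correction(t, intensity, horizon, jump_law)


def log_char_fn(a, t, intensity, horizon, jump_law):
    """psi_t(a) from (2) with Sigma = 0 and b = 0 for the toy measure."""
    t = min(max(t, 0.0), horizon)
    return intensity * t * sum(
        p * (cmath.exp(1j * a * x) - 1.0 - 1j * a * x / (1.0 + abs(x)))
        for x, p in jump_law)


if __name__ == "__main__":
    rng = random.Random(0)
    law = [(1.0, 0.5), (-2.0, 0.5)]  # assumed jump law: +1 or -2 with equal probability
    a, t, n = 0.7, 1.5, 20000
    estimate = sum(
        cmath.exp(1j * a * path_value(t, sample_jumps(3.0, 2.0, law, rng), 3.0, 2.0, law))
        for _ in range(n)) / n
    print(estimate, cmath.exp(log_char_fn(a, t, 3.0, 2.0, law)))
```

The two printed complex numbers should agree up to Monte Carlo error. The Poisson sampler is a simple stdlib-only choice made for this sketch; a library sampler would serve equally well.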

Lemma 9 needs to be extended to cope with processes with infinitely many jumps. This can be done by writing such a process as an infinite sum of processes, each with finitely many jumps, and convergence can be determined by looking at the explicit expressions for their characteristic functions.

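Lemma 10 Let $\mu_1,\mu_2,\ldots$ be measures on $\mathbb R^d\times\mathbb R_+$ such that $\mu=\sum_n\mu_n$ satisfies the conditions of Theorem 1.

Also, let $X^1,X^2,\ldots$ be a sequence of independent processes such that $X^n$ is an $\mathbb R^d$-valued independent increments process with parameters $(0,0,\mu_n)$ and $X^n_0=0$. Then, $\sum_{k=1}^nX^k_t$ converges in probability to the independent increments process X with parameters $(0,0,\mu)$.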

Proof: Let us set . As a sum of the independent processes

, each of which has the independent increments property, it follows that

satisfy the independent increments property. We just need to show that they converge to the process X. For any

, independence of the processes

gives

The integrand on the right hand side is bounded by for some constant K and, therefore, the exponent is bounded by

By dominated convergence, this goes to zero as m, n go to infinity. So, goes to zero. Therefore,

tend to zero in probability and, by completeness of

, the sequence

converges in probability to a limit

. By dominated convergence,

as required. ⬜

Putting this together, we can use Lemma 9 to construct independent increments processes with finitely many jumps and, then, by summing these, we obtain an independent increments process with arbitrary jump measure $\mu$. Adding on the drift term and Gaussian component gives the process X as required, completing the proof of Theorem 1.

Lemma 11 Let

satisfy the conditions of Theorem 1 and define the following.

Then,

is an independent increments process with parameters

.

Proof: This is just a direct application of Lemma 10, and expression (1) follows from the independence of W and Y and the characteristic function of ,

⬜

It only remains to prove the decomposition of X given in the second statement of Theorem 2. We do this now, by making use of the construction given above.

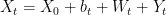

Lemma 12 Let X be a cadlag independent increments process with parameters

and, for each

let

be as in (14), so that

. Setting

(15) the sum

converges in probability, and Y is an independent increments process with parameters

. Furthermore, X decomposes as a sum of independent processes

where W is an independent increments process possessing a continuous modification and

for all

.

Proof: First, replacing X by if necessary, we suppose that

. As the distribution of X is fully determined by

, we may as well suppose that X has been constructed according to Lemma 11. It then only needs to be shown that the processes

defined in Lemma 11 satisfy (15). First, by the construction given in Lemma 9 for independent increments processes with parameters

we see that

whenever

and,

| | (16) |

Next, by Lemma 10 the process has independent increments with parameters

where

As , the jumps

are never in

, so we can rewrite (16) as,

⬜

This just about completes the proof of all the statements above. Only one small point remains — in Theorem 2 it was stated that the decomposition into a continuous centered Gaussian process W and independent increments process Y with

is unique. That the process Y given by Lemma 12 has quadratic variation with zero continuous part follows from equation (11) and the fact that it has parameters

. Uniqueness follows from the fact that if

is any other such decomposition then

is continuous. As

are both purely discontinuous independent increments processes with the same jumps, the construction above shows that they are equal.

Source: https://almostsuremath.com/2010/09/15/processes-with-independent-increments/
